When investing, your capital is at risk. The value of investments can go down as well as up, and you may get back less than you put in. The content of this article is for information purposes only and does not constitute personal advice or a financial promotion.

The real question behind “Who is the next Nvidia?”

When people ask who could be “the next Nvidia or Broadcom” over the next five years, they are not really asking for a stock tip.

They are asking something more specific:

Which companies sit in the same kind of choke-points that Nvidia and Broadcom have owned in this AI cycle?

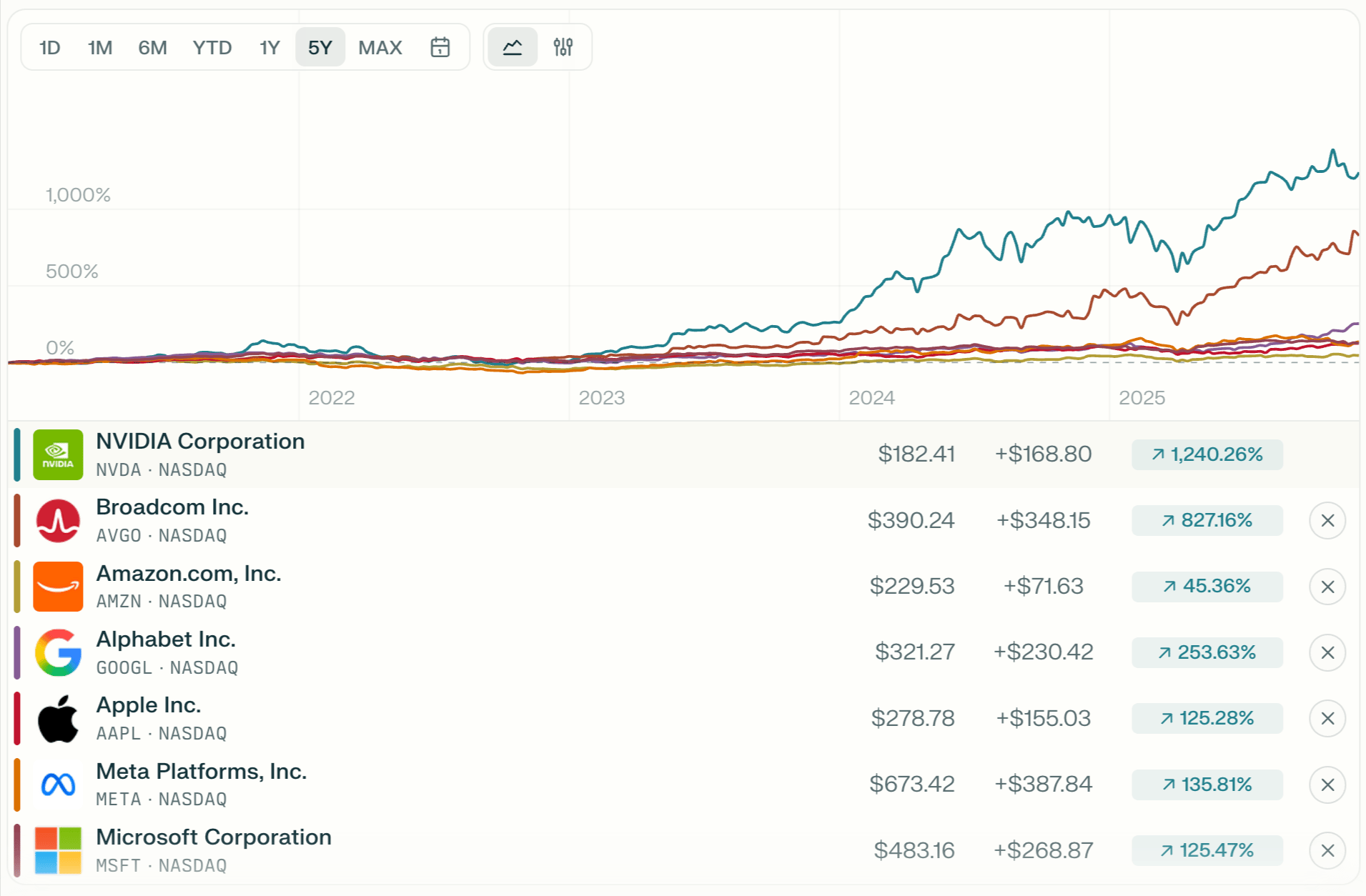

Over the last five years, Nvidia and Broadcom have turned every dollar of shareholder capital into roughly $10 to $13, including dividends, as AI data centres soaked up GPUs and custom networking silicon at an extraordinary pace. Nvidia grew by 1,240% and Broadcom by 827%.

5y Growth for Nvidia and Broadcom vs other stocks
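To see how the percentage figures relate to the "dollars per dollar" framing, here is a minimal sketch. It assumes the quoted growth figures are total returns over exactly five years; the function names are illustrative and not from any library.

```python
def total_return_to_multiple(pct_return: float) -> float:
    """Convert a total return in percent into an ending-value multiple of capital."""
    return 1.0 + pct_return / 100.0


def annualised_rate(multiple: float, years: float) -> float:
    """Compound annual growth rate implied by an ending multiple over `years`."""
    return multiple ** (1.0 / years) - 1.0


# Five-year growth figures quoted in the article (assumed to be total returns).
five_year_returns_pct = {"Nvidia": 1240.0, "Broadcom": 827.0}

for name, pct in five_year_returns_pct.items():
    multiple = total_return_to_multiple(pct)
    cagr = annualised_rate(multiple, years=5)
    print(f"{name}: $1 -> ${multiple:.2f}, about {cagr:.0%} a year")
```

On these assumptions, $1 becomes about $13.40 for Nvidia and about $9.27 for Broadcom, which works out to compounding of roughly 68% and 56% a year.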

Those returns came from three ingredients:

A structural tailwind (AI compute spending)

A defensible position in the stack (CUDA, high-end GPUs, custom ASICs, networking)

Operating leverage as volumes scaled faster than costs
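The third ingredient, operating leverage, can be illustrated with a toy cost model. This is a sketch with purely hypothetical numbers, not data from either company: when part of the cost base is fixed, profit grows faster than volume.

```python
def operating_profit(units: float, price: float, variable_cost: float,
                     fixed_costs: float) -> float:
    """Profit after per-unit variable costs and a fixed cost base."""
    return units * (price - variable_cost) - fixed_costs


# Hypothetical inputs, chosen only to make the arithmetic easy to follow.
price = 100.0          # selling price per unit
variable_cost = 40.0   # cost that scales with each unit sold
fixed_costs = 3000.0   # costs that do not change with volume (R&D, overheads)

base_profit = operating_profit(100, price, variable_cost, fixed_costs)
grown_profit = operating_profit(150, price, variable_cost, fixed_costs)

volume_growth = 150 / 100 - 1                     # +50% units
profit_growth = grown_profit / base_profit - 1    # +100% profit
leverage = profit_growth / volume_growth          # degree of operating leverage

print(f"Volume +{volume_growth:.0%}, profit +{profit_growth:.0%}, leverage {leverage:.1f}x")
```

In this made-up case a 50% rise in volume doubles operating profit. The same mechanism works in reverse when volumes fall.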

The aim is not to find another Nvidia clone. It is to look for critical infrastructure, pricing power, and long demand runways in and around AI.

Pick and shovel hardware: the closest cousins to NVDA and AVGO

These are the companies that sit closest to the physical bottlenecks of AI compute.

1. TSMC $TSM: The AI foundry

Role in the stack

TSMC is the contract manufacturer behind many of the world's most valuable chips, including Nvidia’s Hopper and Blackwell AI accelerators, AMD’s Instinct line, and Apple’s high-end processors.

Tailwind

Artificial intelligence is pushing more workloads onto leading-edge nodes. TSMC has already seen quarterly revenue almost double in recent years, helped by AI data centre demand, and it has raised its 2025 revenue growth outlook to the mid-30 percent range, explicitly on the back of AI orders.

Why does it rhyme with Nvidia and Broadcom?

Nvidia and Broadcom sit at the “brains” and the networking of AI systems. TSMC sits at the factory gate. If hyperscalers keep spending on advanced chips, a large share of those dollars has to pass through TSMC’s fabs.

The risks are also clear: geopolitical concentration in Taiwan, plus the capital intensity of continuously building and upgrading cutting-edge fabs.

2. ASML $ASML: The EUV tollbooth

Role in the stack

ASML supplies the extreme ultraviolet (EUV) lithography machines that TSMC and other foundries need to print the smallest AI chips. It is, in practical terms, the only game in town for EUV.

Moat

Decades of R&D, complex supply chains, and system costs that can exceed $300 million to $350 million per tool create a natural barrier to entry. ASML controls more than 90 percent of the EUV market and a dominant share in older lithography as well.

Why does it rhyme with Broadcom?

Broadcom dominates certain custom-silicon and networking niches that everyone else relies on. ASML does something similar at the tool level. Every move to more advanced AI chips tends to require more ASML equipment and services, and the installed base generates recurring service revenue.

The flip side is that ASML is tied to its customers' capex cycle. A pause in leading-edge expansion would show up directly in orders.

3. Super Micro Computer $SMCI: The AI server assembler

Role in the stack

Super Micro designs and ships servers and racks that bundle Nvidia or AMD accelerators into ready-made systems for data centres. It is effectively a high-speed, high-mix “factory” for AI infrastructure.

Recent pattern

Growth has been explosive in recent years as AI orders surged, though guidance has been choppy amid shifting delivery timelines. Analysts regularly highlight both the upside of AI server demand and the need for the company to prove it can sustain margins and free cash flow through the cycle.

Why does it rhyme with Nvidia?

Super Micro is one step further down the value chain. It is not designing the chips but is deeply plugged into the AI system builds. That gives it leverage to AI data centre capex, but with more cyclicality and execution risk than an entrenched chip designer.

Second wave AI silicon and memory

Nvidia dominated the first wave of AI returns. The second wave is more crowded. A few names stand out.

1. AMD $AMD: The alternative accelerator

Role in the stack

AMD designs CPUs and AI accelerators that compete directly with Nvidia at both the compute and system levels.

AI traction

The MI300 and MI350 accelerator families have been ramping through 2025, with AMD securing large multi-year deals with cloud providers and infrastructure partners. Public commentary from the company now pegs the AI data centre market as a multi-hundred-billion-dollar opportunity, with AMD expecting its own AI data centre revenues to grow at very high double-digit rates over the next three to five years.

Why it matters

In simple terms, every dollar that is not spent on Nvidia accelerators has to go somewhere. To the extent that customers seek a second source, AMD is one of the few realistic options at scale.

The trade-off is that AMD is still fighting for share in a market where Nvidia has a large installed base, deep software moats, and strong customer relationships.

2. Micron $MU: Memory for the AI supercycle

Role in the stack

Every AI system needs high-bandwidth memory (HBM) and DRAM. Without enough fast memory, GPUs sit idle.

Strategic pivot

Micron has recently announced that it will exit its Crucial-branded consumer memory business by early 2026 to focus resources on higher-margin, AI-centric products such as HBM for AI accelerators and advanced data centre DRAM.

This aligns with a broader industry pattern in which demand for HBM, driven by AI workloads, is tightening supply and pushing up prices.

Why does it rhyme with the AI story?

Micron does not have Nvidia-style margins, and memory remains cyclical. However, if AI-related memory demand remains tight for several years, the combination of higher prices and a mix shift toward HBM could produce better-than-average earnings power from current levels.

Software, data and security platforms riding AI infrastructure

Not every beneficiary of AI capex prints chips. A second group lives higher up the stack, monetising data, observability and security.

Examples include:

Snowflake $SNOW: a data cloud used for analytics and AI workloads

Datadog $DDOG: observability for distributed, increasingly AI-heavy infrastructure

CrowdStrike $CRWD and Palo Alto Networks $PANW: security platforms that benefit from more endpoints, more cloud and more complex attack surfaces

These companies tend to share a few characteristics: high gross margins, subscription revenue and large addressable markets. Where growth persists, those economics can support long periods of compounding.

The link to AI is less direct than for chipmakers or foundries. Their outcomes depend not only on AI spend but also on competition, pricing power, and the usual software factors such as customer retention and upsell.

Other potential compounding engines around the AI stack

Beyond the most obvious names, a few other groups sit in interesting positions.

1. Networking and design tools

Arista Networks $ANET supplies high-speed networking hardware that ties AI clusters together. Revenue has been growing at a high-twenties percentage rate year over year, and management has explicitly described this period as a strong phase for demand in cloud and AI networking.

Synopsys $SNPS and Cadence $CDNS provide the software tools used to design chips. As more companies, from cloud providers to carmakers, build custom silicon, demand for advanced electronic design automation has been growing faster than the broader semiconductor industry.

These businesses do not usually get the same headlines as GPU vendors, but they sit at key points in the supply chain that can benefit from higher design complexity and AI-specific chips.

2. IP and edge compute

Arm Holdings $ARM licenses architectures that underpin a large share of mobile and edge devices. As more AI inference runs at the edge, rather than solely in the cloud, Arm-based designs can capture some of that shift through royalties and licenses.

Arm’s growth path depends on how quickly AI workloads migrate toward phones, laptops, vehicles and embedded devices, and how much of that traffic runs on Arm-based designs.

3. Higher risk, longer-dated themes

A final group sits further out on the risk spectrum:

Quantum computing names such as IonQ $IONQ or Rigetti $RGTI, which could complement classical AI in specific workloads if the technology reaches a useful scale

Specialised infrastructure providers and data centre operators that are retooling for AI-heavy loads

The potential upside is considerable if their markets develop as hoped, but the path is far more uncertain and often hinges on technology, financing and regulation.

How some investors frame this space

There is no single correct way to approach this. A common framework among long-only investors looks something like this:

Start from themes rather than single names

The focus is on clusters such as AI compute, memory, networking, design tools and software, rather than on a single heroic stock. TSMC, ASML, AMD, Micron, Super Micro, Arista, Synopsys, Cadence, and others all sit at different points in the same AI value chain.

Separate “core” from “satellite” exposure

Large, diversified platforms such as the original Magnificent 7 often sit in the core of a portfolio. More focused AI infrastructure and software names then act as satellites around that core, with smaller individual weights.

Treat past outliers as context, not templates

Nvidia and Broadcom delivered multi-hundred percent, even four-digit percentage, total returns over five years.

That combination of rapid earnings growth and multiple expansion from prior starting valuations is unusual. Future winners may compound strongly without repeating that exact path.
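The interaction between earnings growth and multiple expansion is multiplicative, which is why the combination is so powerful. Here is a minimal sketch using hypothetical inputs (not actual figures for either company) and the identity price = earnings per share × price-to-earnings ratio, ignoring dividends:

```python
def decompose_price_return(eps_start: float, eps_end: float,
                           pe_start: float, pe_end: float) -> tuple[float, float, float]:
    """Split a price multiple into earnings growth and valuation change.

    Relies on price = EPS * P/E, so the two factors multiply.
    Dividends are ignored in this simplified view.
    """
    earnings_growth = eps_end / eps_start
    multiple_expansion = pe_end / pe_start
    return earnings_growth, multiple_expansion, earnings_growth * multiple_expansion


# Hypothetical example: earnings rise fivefold while the P/E ratio doubles.
growth, expansion, price_multiple = decompose_price_return(
    eps_start=1.0, eps_end=5.0, pe_start=20.0, pe_end=40.0
)
print(f"Earnings x{growth:.1f}, valuation x{expansion:.1f}, price x{price_multiple:.1f}")
```

In this illustration the share price rises tenfold even though earnings rose only fivefold. If the valuation multiple stayed flat, the same earnings growth would deliver half that outcome.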

In practice, the interesting question is not “which stock will be the next Nvidia”, but where the durable bottlenecks and platforms are in this AI build-out, and how their economics look from today’s prices.

Disclaimer: This publication is for general information and educational purposes only and should not be taken as investment advice. It does not take into account your individual circumstances or objectives. Nothing here constitutes a recommendation to buy, sell, or hold any investment. Past performance is not a reliable indicator of future results. Always do your own research or consult a qualified financial adviser before making investment decisions. Capital is at risk.
