When investing, your capital is at risk. The value of investments can go down as well as up, and you may get back less than you put in. The content of this article is for information purposes only and does not constitute personal advice or a financial promotion.

The supposed irony: “Everyone is racing to not need Nvidia”

Scroll through X for a day, and you will see the same storyline on repeat:

Google trains on TPUs now

Meta is building its own silicon

Amazon has Trainium and Inferentia

Microsoft is working with AMD and others

The punchline is simple:

Nvidia is printing money today from customers who are building its replacement for tomorrow.

It sounds clever. It fits nicely into a contrarian thread. It is also a very incomplete picture of what is actually happening inside hyperscale data centres.

Big Tech is not trying to wake up one day to find itself with zero exposure to Nvidia. They are trying to stop their Nvidia invoice from compounding faster than their revenue.

To understand why they still cannot escape Nvidia, you have to stop thinking in terms of “chips” and start thinking in terms of “platforms”.

What Big Tech is really doing: lowering the bill, not cutting the cord

Google, Meta, Amazon, Microsoft and Apple design their own silicon for three practical reasons:

Their workloads are huge.

Their infrastructure costs are enormous.

Their software stacks are unique.

Owning some of the silicon lets them optimise specific workloads. A TPU can be tuned tightly to Google’s internal stack. Trainium can be optimised for AWS customers who are willing to adopt a new toolchain in exchange for a lower cost per token.

That is very different from trying to recreate Nvidia’s entire AI platform.

A custom chip can replace Nvidia for certain jobs. None of today’s custom chips replicates Nvidia’s full combination of hardware, software, networking, and developer ecosystem.

Nvidia’s real moat: a software platform that happens to sell chips

Nvidia’s dominance did not start with crypto mining or gaming GPUs. It began when Nvidia built the operating system for accelerated compute and then spent more than a decade convincing the world to live inside it.

1. CUDA: the invisible operating system of AI

CUDA explained

CUDA launched in 2006 as a way to program GPUs for general computing. Nearly twenty years later, it underpins:

Millions of developers

Thousands of libraries and tools

First-class support in PyTorch, TensorFlow and JAX

Most cutting-edge AI research still starts with “PyTorch on Nvidia” because it is the path of least resistance. Moving away is not like changing phones. It is like replatforming a company-wide tech stack.

Alternatives exist. PyTorch can export to ONNX, AMD has ROCm, and there are serious efforts to target TPUs more directly. But the gravitational pull of CUDA is still strong.

CUDA is why Nvidia isn’t just selling chips; it’s selling the entire ecosystem those chips run on.

That makes Nvidia very hard to replace.

2. Tensor Cores: silicon shaped to transformers

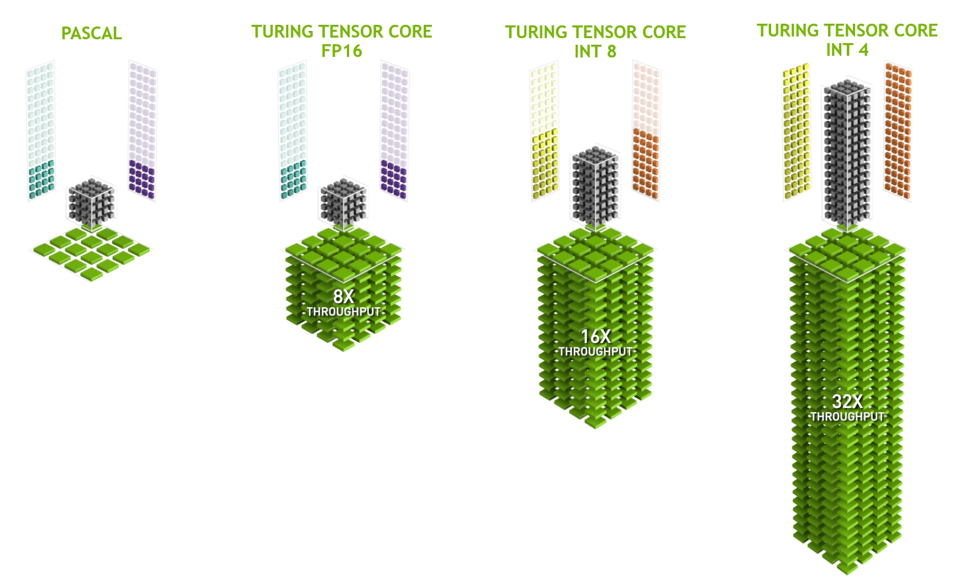

Starting with the Volta architecture in 2017, Nvidia added Tensor Cores: specialised units for matrix multiplication and the kind of linear algebra that transformers live on. That design choice lined up almost perfectly with the transformer wave that began the same year.
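To make “the kind of linear algebra that transformers live on” concrete, here is a minimal teaching sketch in plain Python of scaled dot-product attention, the core transformer operation. It assumes a single attention head, no masking and tiny hand-written matrices, and it is an illustration only, not how Nvidia’s hardware or any production library implements attention. The point is that the heavy lifting is two matrix multiplications, which is exactly the operation Tensor Cores accelerate.

```python
import math


def matmul(a, b):
    """Naive matrix multiply: the operation Tensor Cores run in hardware."""
    inner = len(b)
    cols = len(b[0])
    return [[sum(a[i][k] * b[k][j] for k in range(inner)) for j in range(cols)]
            for i in range(len(a))]


def transpose(m):
    """Swap rows and columns."""
    return [list(row) for row in zip(*m)]


def softmax_rows(m):
    """Turn each row of scores into weights that sum to 1."""
    out = []
    for row in m:
        peak = max(row)  # subtract the max for numerical stability
        exps = [math.exp(x - peak) for x in row]
        total = sum(exps)
        out.append([e / total for e in exps])
    return out


def attention(q, k, v):
    """Single-head scaled dot-product attention: softmax(Q K^T / sqrt(d)) V."""
    d = len(q[0])
    scores = matmul(q, transpose(k))  # matrix multiply #1: Q x K^T
    scaled = [[s / math.sqrt(d) for s in row] for row in scores]
    weights = softmax_rows(scaled)
    return matmul(weights, v)  # matrix multiply #2: weights x V


if __name__ == "__main__":
    q = k = [[1.0, 0.0], [0.0, 1.0]]
    v = [[1.0, 2.0], [3.0, 4.0]]
    print(attention(q, k, v))
```

Each output row is a weighted blend of the rows of `v`. A real model repeats this across many heads and layers with matrices thousands of elements wide, which is why matrix-multiply throughput matters so much.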

Every new architecture since then has been tuned around this pattern: more Tensor Core throughput, better sparsity, better utilisation for attention-heavy models.

With Tensor Cores, Nvidia, partly by accident and partly by foresight, had built the default hardware for the transformer era almost as soon as that era began.

And that single design choice now prints tens of billions of dollars per quarter.

3. Full-stack vertical integration

Nvidia does not just sell a chip. It sells:

GPUs

Networking, through the Mellanox acquisition

NVLink interconnects inside systems

DGX and HGX reference systems

cuDNN, TensorRT and CUDA libraries

Scheduling and orchestration tools

Now, inference microservices through NIM

That is a stack from bare metal through to deployment, and no rival currently offers all of it in a single vendor-controlled package.

Competitors have pieces of the puzzle. Nvidia owns the whole puzzle.

That is why the biggest tech companies still run a large share of their AI on Nvidia: not because they love paying Nvidia, but because no one else offers a full “all-in-one” AI stack.

4. A 10- to 15-year head start

You can design a great chip in a few years. You cannot recreate fifteen years of accumulated tooling, tutorials, university courses, research code, enterprise integrations and habits that assume “there is a CUDA kernel for this”.

That time advantage is Nvidia’s real compounding asset.

Case study 1: Google, the TPU leader that still buys Nvidia at scale

If anyone looks like a credible “we do not need Nvidia” story, it is Google.

By 2025, estimates suggest Google has AI compute capacity equivalent to about one million H100-class accelerators, combining roughly 400,000 Nvidia GPUs with around 600,000 TPUs.
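That estimate is easy to sanity-check. The sketch below assumes, as the headline figure implicitly does, that each Nvidia GPU and each TPU counts as roughly one H100-class unit; real per-chip performance varies by generation and workload, so the shares are rough. The function name `compute_mix` and the dictionary keys are illustrative, not from any library.

```python
def compute_mix(fleet):
    """Return each chip family's share of total H100-equivalent capacity.

    Assumption: every chip in `fleet` counts as one H100-class unit.
    """
    total = sum(fleet.values())
    return {name: count / total for name, count in fleet.items()}


google_fleet = {"nvidia_gpu": 400_000, "tpu": 600_000}  # rough estimates from the text
mix = compute_mix(google_fleet)
for name, share in mix.items():
    print(f"{name}: {share:.0%}")
```

On those assumptions, Nvidia hardware still accounts for roughly two-fifths of capacity at the company most often cited as the TPU success story.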

TPUs are not a side project. They power large parts of Google’s internal training and many production services, and they can offer better price performance for some workloads.

So why does Google still buy Nvidia hardware?

External customers want the flexibility of standard Nvidia instances.

Many open-source tools, research projects, and third-party models assume CUDA first.

TPUs are excellent for specific patterns, but Nvidia remains the default for general-purpose, mixed-workload AI.

This is not “Google secretly cannot get rid of Nvidia”. It is Google running a portfolio of compute options, with Nvidia as one of the core pillars.

Case study 2: Meta, “good customers for Nvidia” and still diversifying

Meta’s CEO, Mark Zuckerberg, has publicly discussed building massive GPU fleets: around 350,000 Nvidia H100 GPUs, and roughly 600,000 H100 equivalents of compute in total once other GPUs are included, by the end of 2024.

He has also described Meta’s H100 clusters as the workhorses behind Llama training. That is not the language of a company that has moved on from Nvidia.

At the same time, Meta is:

Working with other vendors, such as AMD, for some deployments

Rolling out its own inference-optimised accelerators for specific services

Again, the pattern is clear. Nvidia sits at the centre of the training stack. Custom and alternative silicon chips take slices of the workload where they make economic sense.

Case study 3: AWS and Microsoft have more options, not less Nvidia

AWS is the largest cloud platform and a leading GPU cloud provider. It has spent years building an Nvidia-based GPU business and markets that capacity aggressively to customers.

In parallel, AWS is pushing hard on Trainium. Project Rainier, built around nearly half a million Trainium2 chips, is already running workloads for partners such as Anthropic and is expected to scale toward one million Trainium2 chips by the end of 2025.

Microsoft follows a similar pattern:

Very large Nvidia deployments for Azure and for partners such as OpenAI

Investment in alternative accelerators and partnerships to avoid single-supplier dependence

In both cases, the story is not “Nvidia is being swapped out”. It is “Nvidia is one anchor tenant in a data centre where the landlord wants more than one major client”.

The numbers: this is not what displacement looks like

Nvidia’s latest reported quarter, Q3 of fiscal 2026, does not look like a company being quietly written out of the script. It looks like a company at the centre of the AI build-out:

Record revenue of 57.0 billion dollars, up 62 percent year on year

Data centre revenue of 51.2 billion dollars, up 66 percent year on year

Guidance that points higher again into Q4

You do not go from single-digit billions to 57 billion dollars of quarterly revenue in about two and a half years if your largest customers are actually replacing you at scale.

What is happening instead is more subtle:

Custom chips expand total AI compute supply

Specialised accelerators take slices of workloads where economics are compelling

Nvidia responds by raising the performance bar with architectures like Blackwell and by deepening its software stack

The result is a larger market with greater segmentation, but still with Nvidia at the centre of both the most demanding and the most general-purpose workloads.

The real “irony”: the harder they pull away, the deeper the stack gets

The common narrative says:

Nvidia is selling chips to companies building its replacement.

The reality is closer to this:

Every time a hyperscaler invests in its own silicon, it confirms that AI infrastructure is a long-lived capital theme rather than a short hype cycle.

Every time a new accelerator appears, Nvidia has an incentive to push harder on performance, software and integration.

Every capacity expansion in TPUs, Trainium or custom ASICs increases model ambition, which often still lands on Nvidia for the most complex training runs.

Big Tech is not trying to turn Nvidia into zero. They are trying to turn “100 percent Nvidia everywhere” into “Nvidia for the hardest things, our own silicon for the things we can standardise”.

From Nvidia’s point of view, that is not a comfortable monopoly, but it is still dominance.

Infrastructure vs chips: the Buffett-style framing

There is a neat narrative that goes:

Infrastructure is the real moat, so the better bet is on cloud and platforms, not on the chip vendor.

Alphabet’s recent appeal to long-term value investors fits that story. It combines a large AI footprint, strong cash flows and significant control over its internal hardware and software stack.

The catch is that Nvidia no longer looks like a commodity chip vendor. It looks increasingly like an infrastructure company that happens to make its own silicon:

Compute layer through GPUs

Networking through Mellanox and NVLink

Software layer through CUDA, cuDNN, TensorRT and NIM

Development layer through SDKs and frameworks

Deployment layer through tightly integrated systems and services

That is why hyperscalers are comfortable spending tens of billions of dollars a year on Nvidia, even as they build alternatives. They are buying a stack, not just a part.

Final take: Nvidia is the platform; everyone else is negotiating the rent

Big Tech is not secretly about to switch Nvidia off. They are:

Moving specific workloads to their own silicon, where the economics are clearly better

Keeping Nvidia at the centre of general-purpose training and many high-end deployments

Using competition to negotiate price, shape roadmaps, and diversify risk

The short version:

Nvidia has become the default AI compute platform.

Custom chips are the cost-control mechanism at the edges.

That is not what replacement looks like. That is what dominance looks like when the customers are powerful enough to negotiate.

Disclaimer: This publication is for general information and educational purposes only and should not be taken as investment advice. It does not take into account your individual circumstances or objectives. Nothing here constitutes a recommendation to buy, sell, or hold any investment. Past performance is not a reliable indicator of future results. Always do your own research or consult a qualified financial adviser before making investment decisions. Capital is at risk.
